Watch voice assistants enable quick and efficient on-the-go scenarios. Voice interactions on wearables are dynamic, meaning that the user may speak to their wrist without necessarily looking at the device while waiting for a response.

With Assistant App Actions, Android developers can extend Wear OS apps to Google Assistant, fast forwarding users into their apps with voice commands like "Hey Google, start my run on ExampleApp."

Limitations

Assistant on Wear supports media and workout tracking activity interactions. For guidance on integrating media apps with Assistant, see Google Assistant and media apps. The following Health and Fitness BIIs are supported for Wear OS apps:

How it works

App Actions extend app functionality to Assistant, enabling users to access app features quickly, using their voice. When a user indicates to Assistant that they want to use your app, Assistant looks for App Actions registered to your app in the app's shortcuts.xml resource.

App Actions are described in shortcuts.xml with Android capability elements. Capability elements pair built-in intents (BIIs), which are semantic descriptions of an app capability, with fulfillment instructions, such as a deep link template. When you upload your app using the Google Play Console, Google registers the capabilities declared in shortcuts.xml, making them available for users to trigger from Assistant.
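A capability can be fulfilled through a deep link template, which is filled in with the parameters Assistant extracted from the query. The sketch below approximates that expansion in plain Kotlin for a single `{?name,duration}` query expression. The `example://` template and the expandQueryTemplate function are hypothetical, and the sketch does not cover the full URL template syntax. It also uses URLEncoder's form encoding, where spaces become `+`.

```kotlin
import java.net.URLEncoder

// Expands one form-style query expression such as "{?name,duration}".
// Missing parameters are omitted, like unset template variables.
fun expandQueryTemplate(template: String, params: Map<String, String>): String {
    val start = template.indexOf("{?")
    if (start < 0) return template // nothing to expand
    val end = template.indexOf('}', start)
    require(end > start) { "Unterminated expression in $template" }

    val names = template.substring(start + 2, end).split(',').map { it.trim() }
    val query = names
        .filter { params.containsKey(it) }
        .joinToString("&") { name ->
            // Form encoding: "trail run" becomes "trail+run".
            "$name=${URLEncoder.encode(params.getValue(name), "UTF-8")}"
        }

    val base = template.substring(0, start)
    val rest = template.substring(end + 1)
    return if (query.isEmpty()) base + rest else "$base?$query$rest"
}

// expandQueryTemplate("example://exercise/start{?name,duration}",
//     mapOf("name" to "Running", "duration" to "PT1H"))
// returns "example://exercise/start?name=Running&duration=PT1H"
```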

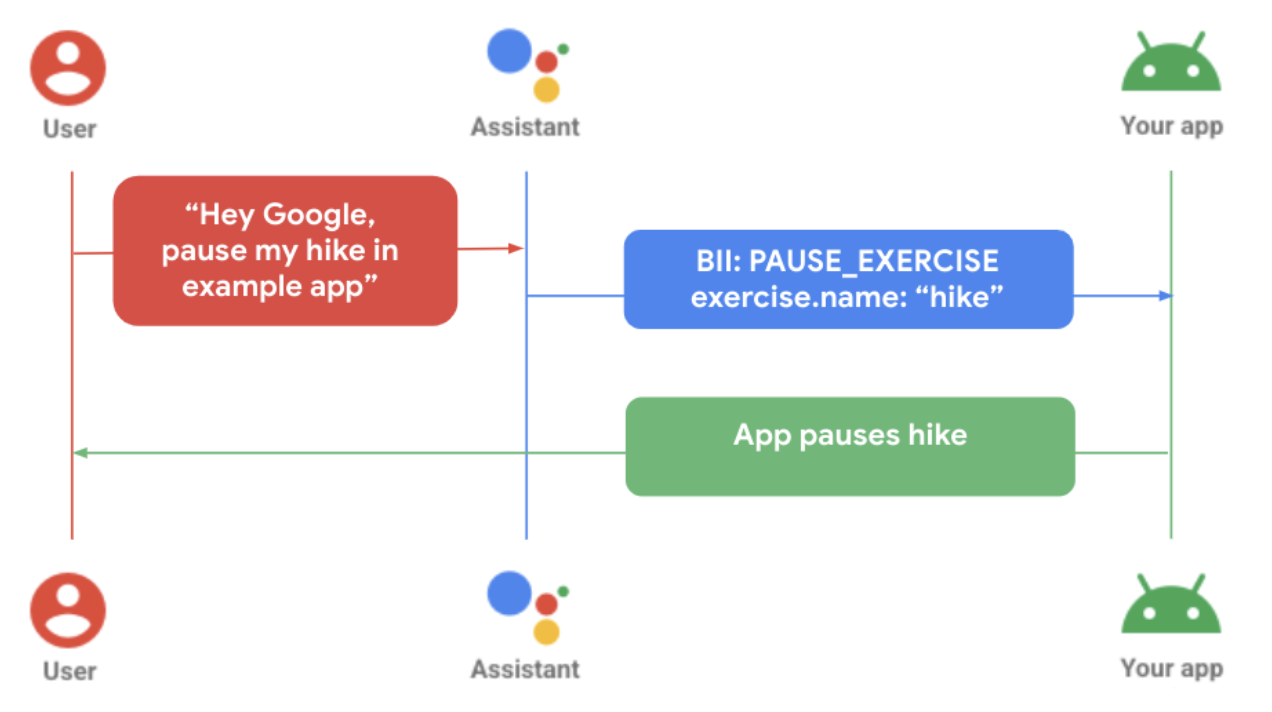

The preceding diagram demonstrates a user pausing their exercise in a standalone app. The following steps occur:

- The user makes a voice request to Assistant for the specific wearable app.

- Assistant matches the request to a pre-trained model (BII) and extracts any parameters found in the query that are supported by the BII.

  - In the example, Assistant matches the query to the PAUSE_EXERCISE BII and extracts the exercise name parameter, “hike.”

- The app is triggered via its shortcuts.xml capability fulfillment definition for this BII.

- The app processes the fulfillment, pausing the exercise.
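The steps above can be modeled in plain Kotlin to show how a matched BII and its extracted parameters reach the app's fulfillment logic. Everything in this sketch is hypothetical and stands in for the Android intent plumbing that Assistant and the platform handle in a real app. That includes the BiiRequest and ExerciseSession types, the fulfill function, and the use of "exercise.name" as the PAUSE_EXERCISE parameter key, which mirrors the START_EXERCISE sample.

```kotlin
// Hypothetical stand-in for what reaches the app after Assistant matches
// a query to a BII and extracts its parameters.
data class BiiRequest(val bii: String, val params: Map<String, String>)

// Hypothetical in-app workout state.
class ExerciseSession {
    var current: String? = null
        private set
    var paused: Boolean = false
        private set

    fun start(name: String) {
        current = name
        paused = false
    }

    fun pause() {
        paused = true
    }
}

// Routes a request to the matching fulfillment logic.
// Returns true if the app handled the request.
fun fulfill(request: BiiRequest, session: ExerciseSession): Boolean {
    return when (request.bii) {
        "actions.intent.START_EXERCISE" -> {
            val name = request.params["exercise.name"] ?: return false
            session.start(name)
            true
        }
        "actions.intent.PAUSE_EXERCISE" -> {
            val name = request.params["exercise.name"]
            val ongoing = session.current
            // Refuse if nothing is running or a different exercise was named.
            if (ongoing == null || (name != null && !name.equals(ongoing, ignoreCase = true))) {
                false
            } else {
                session.pause()
                true
            }
        }
        else -> false // BII not handled by this sketch
    }
}
```

For the "hike" example, calling fulfill with a PAUSE_EXERCISE request on a session that has started "hike" pauses that session and returns true.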

Connectivity

App Actions development varies depending on the functionality of your app within the Android-powered device ecosystem.

Tethered: When a wearable app is dependent on the mobile app for full functionality, user queries made to Assistant through the watch are fulfilled on the mobile device. App Actions fulfillment logic must be built into the mobile app for this scenario to function properly.

Untethered: When a wearable app is independent from a mobile app for functionality, Assistant fulfills user queries locally on the watch. App Actions capabilities must be built into the wearable app for these requests to fulfill properly.
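The connectivity guidance comes down to one decision: which app module must contain the App Actions capabilities and fulfillment logic. The sketch below encodes that rule. The Connectivity and FulfillmentTarget types and the fulfillmentTarget function are hypothetical names used only for illustration.

```kotlin
// Whether the Wear OS app depends on the mobile app for full functionality.
enum class Connectivity { TETHERED, UNTETHERED }

// Where App Actions fulfillment must be built.
enum class FulfillmentTarget { MOBILE_APP, WEAR_APP }

fun fulfillmentTarget(connectivity: Connectivity): FulfillmentTarget =
    when (connectivity) {
        // Queries spoken to the watch are fulfilled on the mobile device.
        Connectivity.TETHERED -> FulfillmentTarget.MOBILE_APP
        // Queries are fulfilled locally on the watch.
        Connectivity.UNTETHERED -> FulfillmentTarget.WEAR_APP
    }
```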

Add voice capabilities to Wear

Integrate App Actions with your Wear OS app by following these steps:

- Match the in-app functionality you want to voice enable to a corresponding BII.

- Declare support for Android shortcuts in your main activity in the AndroidManifest.xml resource:

```xml
<!-- AndroidManifest.xml -->
<meta-data
    android:name="android.app.shortcuts"
    android:resource="@xml/shortcuts" />
```

- Add an `<intent-filter>` element to AndroidManifest.xml. This enables Assistant to use deep links to connect to your app's content.

- Create shortcuts.xml to provide fulfillment details for your BIIs. You use capability shortcut elements to declare to Assistant the BIIs your app supports. For more information, see Add capabilities.

- In shortcuts.xml, implement a capability for your chosen BII. The following sample demonstrates a capability for the START_EXERCISE BII:

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- This is a sample shortcuts.xml -->
<shortcuts xmlns:android="http://schemas.android.com/apk/res/android">
  <capability android:name="actions.intent.START_EXERCISE">
    <intent
      android:action="android.intent.action.VIEW"
      android:targetPackage="YOUR_UNIQUE_APPLICATION_ID"
      android:targetClass="YOUR_TARGET_CLASS">
      <!-- For example, name = "Running" -->
      <parameter
        android:name="exercise.name"
        android:key="name"/>
      <!-- For example, duration = "PT1H" -->
      <parameter
        android:name="exercise.duration"
        android:key="duration"/>
    </intent>
  </capability>
</shortcuts>
```

- If applicable, expand support for user speech variations using an inline inventory, which represents features and content in your app.

```xml
<capability android:name="actions.intent.START_EXERCISE">
  <intent
    android:targetPackage="com.example.myapp"
    android:targetClass="com.example.myapp.ExerciseActivity">
    <parameter
      android:name="exercise.name"
      android:key="exercise" />
  </intent>
</capability>

<shortcut android:shortcutId="CARDIO_RUN">
  <capability-binding android:key="actions.intent.START_EXERCISE">
    <parameter-binding
      android:key="exercise.name"
      android:value="@array/run_names" />
  </capability-binding>
</shortcut>
```

- Update your app's logic to handle the incoming App Actions fulfillment.

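```kotlin
// FitMainActivity.kt (excerpt from the activity class;
// requires android.net.Uri and android.os.Bundle imports)
private fun handleIntent(data: Uri?) {
    // START_EXERCISE is an app-defined constant holding the intent extra key.
    // It must match the android:key of the parameter in shortcuts.xml.
    val startExercise = intent?.extras?.getString(START_EXERCISE)

    val actionHandled = if (startExercise != null) {
        // Map the spoken exercise name to the app's exercise type.
        val type = FitActivity.Type.find(startExercise)
        val arguments = Bundle().apply {
            putSerializable(FitTrackingFragment.PARAM_TYPE, type)
        }
        // Show the tracking screen for the requested exercise.
        updateView(FitTrackingFragment::class.java, arguments)
        true
    } else {
        // No App Actions extra: fall back to the default screen.
        showDefaultView()
        false
    }

    // App-defined helper that reports whether the action was handled.
    notifyActionSuccess(actionHandled)
}
```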
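The `<intent-filter>` step depends on the system matching a deep link against the filter's data attributes. The sketch below is a rough model that checks a URI's scheme, host, and path prefix, the way a filter declaring android:scheme, android:host, and android:pathPrefix would. The DeepLinkFilter class and the example://exercise values are hypothetical. Real Android intent matching has more rules, such as MIME types, ports, and pattern attributes, and the sketch leaves them out.

```kotlin
import java.net.URI

// Hypothetical model of an <intent-filter> <data> element with
// android:scheme, android:host, and android:pathPrefix set.
data class DeepLinkFilter(val scheme: String, val host: String, val pathPrefix: String)

// Returns true if this simplified filter accepts the URI.
// Android compares schemes case-sensitively, so no case folding is done here.
fun DeepLinkFilter.matches(uri: URI): Boolean =
    uri.scheme == scheme &&
        uri.host == host &&
        (uri.path ?: "").startsWith(pathPrefix)

// DeepLinkFilter("example", "exercise", "/start")
//     .matches(URI("example://exercise/start?name=Running")) returns true
```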
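The START_EXERCISE sample passes the exercise.duration parameter as an ISO 8601 duration string such as PT1H. The sketch below assumes your fulfillment receives that string unchanged under the duration key and converts it with java.time.Duration.parse. The parseDurationMinutes helper is a hypothetical name.

```kotlin
import java.time.Duration
import java.time.format.DateTimeParseException

// Converts an ISO 8601 duration such as "PT1H" or "PT30M" to whole minutes.
// Returns null when the parameter is absent or not a valid duration,
// so the caller can fall back to an open-ended workout.
fun parseDurationMinutes(raw: String?): Long? {
    if (raw == null) return null
    return try {
        Duration.parse(raw).toMinutes()
    } catch (e: DateTimeParseException) {
        null
    }
}

// parseDurationMinutes("PT1H") returns 60
// parseDurationMinutes("PT1H30M") returns 90
// parseDurationMinutes("one hour") returns null
```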
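An inline inventory lets several spoken variations map onto one shortcut, for example by binding the names in @array/run_names to the CARDIO_RUN shortcut. Assistant performs this matching itself. The sketch below only models the idea that many phrases resolve to one identifier. The synonym lists and the CARDIO_WALK entry are hypothetical, because the source does not show the contents of @array/run_names.

```kotlin
// Hypothetical inventory: each shortcut ID is bound to spoken synonyms,
// standing in for string arrays such as @array/run_names.
val inventory: Map<String, List<String>> = mapOf(
    "CARDIO_RUN" to listOf("run", "running", "jog", "jogging"),
    "CARDIO_WALK" to listOf("walk", "walking", "stroll"),
)

// Returns the shortcut ID whose synonyms contain the spoken phrase,
// ignoring case and surrounding whitespace, or null if nothing matches.
fun resolveInventoryItem(spoken: String): String? {
    val normalized = spoken.trim().lowercase()
    return inventory.entries.firstOrNull { (_, synonyms) -> normalized in synonyms }?.key
}

// resolveInventoryItem("Jog ") returns "CARDIO_RUN"
// resolveInventoryItem("swim") returns null
```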
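The handler above relies on an app-defined FitActivity.Type.find to turn the spoken exercise name into an exercise type, but the source does not show that function. The sketch below shows one way it could work. It assumes an enum whose entries carry a display name, with a fallback type when nothing matches. The FitActivity stand-in class, its entries, and the UNKNOWN fallback are all hypothetical.

```kotlin
// Hypothetical stand-in for the app's FitActivity.Type.
class FitActivity {
    enum class Type(val displayName: String) {
        RUNNING("running"),
        WALKING("walking"),
        CYCLING("cycling"),
        UNKNOWN("unknown");

        companion object {
            // Case-insensitive lookup by enum name or display name.
            // Falls back to UNKNOWN so the caller always gets a type.
            fun find(value: String): Type {
                val key = value.trim()
                return values().firstOrNull {
                    it.name.equals(key, ignoreCase = true) ||
                        it.displayName.equals(key, ignoreCase = true)
                } ?: UNKNOWN
            }
        }
    }
}

// FitActivity.Type.find("Running") returns RUNNING
// FitActivity.Type.find("rowing") returns UNKNOWN
```

The fallback means the handler never receives a null type. An app that prefers to report an error for unrecognized exercises could return null from find instead.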

Preview, test, and publish your app

App Actions provide tools to review and test your app. For more detailed information, see Google Assistant plugin for Android Studio. Once you have tested your app and created a test release, you can request an App Actions review and deploy. Review the following best practices for guidance on handling common errors.

Best practices

Create a positive user experience when integrating your app with Assistant by following these recommended best practices.

Show a corresponding or relevant confirmation screen, along with haptic and audio feedback, in response to a user request, both when a request is fulfilled successfully and when an error occurs.

(Comparison images: Basic quality, Better quality, Best quality.)

Common errors and resolutions

For the following error cases, use the recommended ConfirmationActivity messaging in your app.

| Error case | Example user interaction | App response |
|---|---|---|
| Activity already ongoing | "Start my ExerciseName", "Resume my ExerciseName" | Display error: "Already ongoing activity." |
| No activity started | "Pause/Stop my ExerciseName" | Display error: "No activity started." |
| Mismatch of activity types | "Pause/Stop my ExerciseName," which is a different exercise type from the ongoing activity. | Display error: "Activity type mismatch." |
| Login error | "Start my ExerciseName," when the user is not logged into the app. | Play a haptic to alert the user and redirect to the login screen. |
| Permissions error | The user does not have permission to start their requested activity. | Play a haptic to alert the user and redirect to the permission request screen. |
| Sensor issue | The user has location services turned off in their device settings. | Play a haptic to alert the user and show a sensor error screen. Optional next steps: |
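The first three error cases depend only on the request and the current workout state, so they can be checked before any fulfillment runs. The sketch below uses the messages from the table together with a hypothetical Session type and validate function. Login, permission, and sensor checks are app-specific and left out. Applying "No activity started." to a resume request is an added assumption, since the table lists it only for pause and stop. In a real Wear OS app, the returned message would be shown through ConfirmationActivity with haptic feedback; that Android wiring is not shown.

```kotlin
// Hypothetical model of the app's current workout, if any.
data class Session(val exerciseType: String, val paused: Boolean = false)

enum class Command { START, RESUME, PAUSE, STOP }

// Returns the error message from the table for this request,
// or null if the request can be fulfilled.
fun validate(command: Command, requestedType: String, current: Session?): String? {
    if (command == Command.START) {
        return if (current != null) "Already ongoing activity." else null
    }
    // RESUME, PAUSE, and STOP all need an existing activity.
    // (Using this message for RESUME is an assumption, not from the table.)
    if (current == null) return "No activity started."
    if (command == Command.RESUME) {
        return if (!current.paused) "Already ongoing activity." else null
    }
    // PAUSE or STOP must name the same exercise type as the ongoing one.
    if (!current.exerciseType.equals(requestedType, ignoreCase = true)) {
        return "Activity type mismatch."
    }
    return null
}

// validate(Command.START, "running", Session("running")) returns "Already ongoing activity."
// validate(Command.STOP, "cycling", Session("running")) returns "Activity type mismatch."
```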