Google Assistant enables voice-forward control of Android apps. Using Assistant, users can launch apps, perform tasks, access content, and more by using their voice to say things like, "Hey Google, start a run on Example App."

As an Android developer, you can use Assistant's development framework and testing tools to easily enable deep voice control of your apps on Android-powered surfaces, such as mobile devices, cars, and wearables.

App Actions

Assistant's App Actions let users launch and control Android apps with their voice.

App Actions enable deeper voice control of your app, supporting use cases such as:

- Launching features from Assistant: Connect your app's capabilities to user queries that match predefined semantic patterns or built-in intents.

- Displaying app information on Google surfaces: Provide Android widgets for Assistant to display, offering inline answers, simple confirmations, and brief interactions to users without changing context.

- Suggesting voice shortcuts from Assistant: Use Assistant to proactively suggest tasks in the right context for users to discover or replay.

App Actions use built-in intents (BIIs) to enable these and dozens more use cases across popular task categories. See the App Actions overview on this page for details on supporting BIIs in your apps.

Multidevice development

You can use App Actions to provide voice-forward control on device surfaces beyond mobile. For example, with BIIs optimized for Auto use cases, drivers can perform the following tasks using their voice:

- Navigate to the nearest restaurant on their driving route

- Find the closest parking garage

- Locate nearby EV charging stations

App Actions overview

You use App Actions to offer deeper voice control of your app by letting users perform specific tasks in it with their voice. If a user has your app installed, they can state their intent using a phrase that includes your app name, such as "Hey Google, start an exercise on Example App." App Actions support BIIs that model the common ways users express tasks they want to accomplish or information they seek, such as:

- Starting an exercise, sending messages, and performing other category-specific actions.

- Opening a feature of your app.

- Querying for products or content using in-app search.

With App Actions, Assistant can proactively suggest your voice capabilities as shortcuts to users, based on the user’s context. This functionality enables users to easily discover and replay your App Actions. You may also suggest these shortcuts in your app with the App Actions in-app promo SDK.

You enable support for App Actions by declaring <capability> tags in shortcuts.xml. Capabilities tell Google how your in-app functionality can be semantically accessed using BIIs and enable voice support for your features. Assistant fulfills user intents by launching your app to the specified content or action. For some use cases, you can specify an Android widget to display within Assistant to fulfill the user query.
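
As a minimal sketch of such a declaration, the fragment below maps the START_EXERCISE BII to an activity. The package name com.example.app, the class ExerciseActivity, and the extra key exerciseType are hypothetical placeholders. The BII parameter name exercise.name should be checked against the BII reference for the intent you implement.

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- res/xml/shortcuts.xml: illustrative sketch, not a complete configuration. -->
<shortcuts xmlns:android="http://schemas.android.com/apk/res/android">
    <!-- One capability per BII your app supports. -->
    <capability android:name="actions.intent.START_EXERCISE">
        <!-- Assistant launches this intent to fulfill the query.
             targetPackage and targetClass are hypothetical names. -->
        <intent
            android:action="android.intent.action.VIEW"
            android:targetPackage="com.example.app"
            android:targetClass="com.example.app.ExerciseActivity">
            <!-- Pass the BII parameter to the activity as an intent extra
                 under the (hypothetical) key "exerciseType". -->
            <parameter
                android:name="exercise.name"
                android:key="exerciseType" />
        </intent>
    </capability>
</shortcuts>
```

With a declaration like this, a query such as "Hey Google, start a run on Example App" would be expected to launch ExerciseActivity with the recognized exercise name available as the exerciseType extra.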

App Actions are supported on Android 5 (API level 21) and higher. Users can only access App Actions on Android phones. Assistant on Android Go does not support App Actions.

How App Actions work

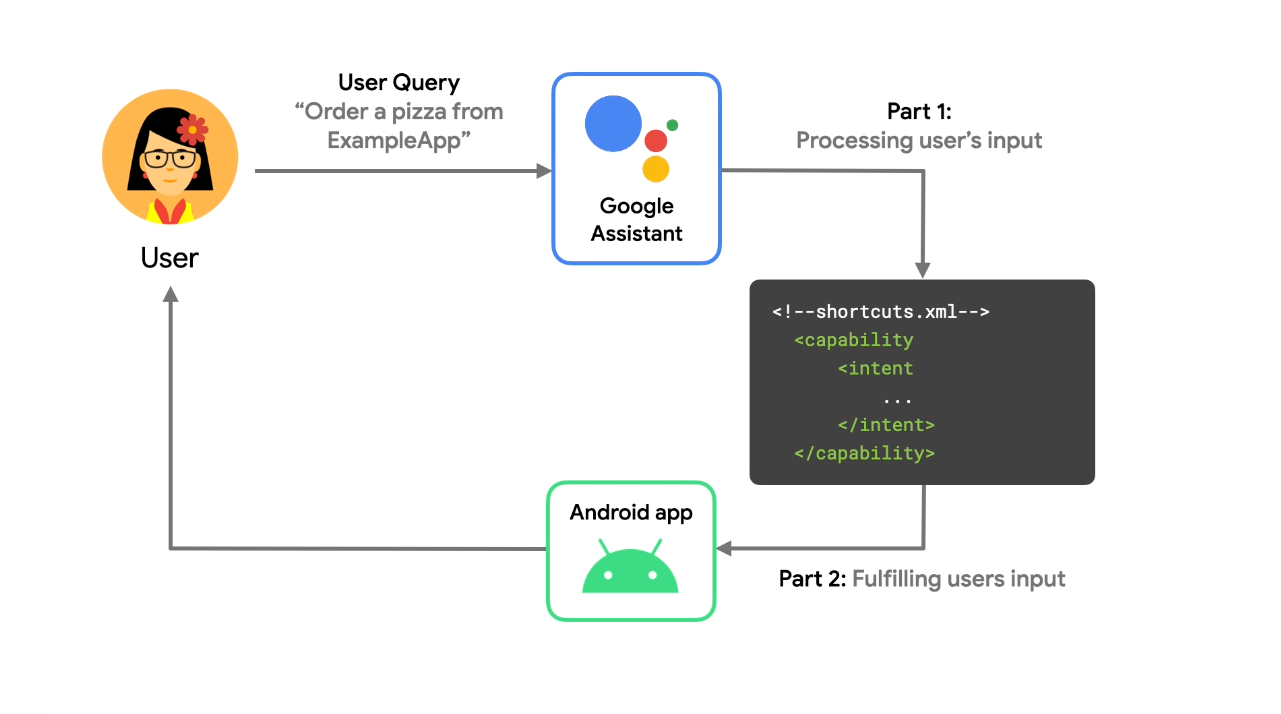

App Actions extend your in-app functionality to Assistant, enabling users to access your app's features by voice. When a user invokes an App Action, Assistant matches the query to a BII declared in your shortcuts.xml resource, launching your app at the requested screen or displaying an Android widget.

You declare BIIs in your app using Android capability elements. When you upload your app using the Google Play console, Google registers the capabilities declared in your app and makes them available for users to access from Assistant.

For example, you might provide a capability for starting exercise in your app. When a user says, "Hey Google, start a run on Example App," the following steps occur:

- Assistant performs natural language analysis on the query, matching the semantics of the request to the predefined pattern of a BII. In this case, the actions.intent.START_EXERCISE BII matches the query.

- Assistant checks whether the BII was previously registered for your app and uses that configuration to determine how to launch it.

- Assistant generates an Android intent to launch the in-app destination of the request, using information you provide in the <capability>. Assistant extracts the parameters of the query and passes them as extras in a generated Android intent.

- Assistant fulfills the user request by launching the generated Android intent. You configure the intent to launch a screen in your app or to display a widget within Assistant.

After a user completes a task, you use the Google Shortcuts Integration Library to push a dynamic shortcut of the action and its parameters to Google, enabling Assistant to suggest the shortcut to the user at contextually relevant times.

Using this library makes your shortcuts eligible for discovery and replay on Google surfaces, like Assistant. For instance, in a ride-sharing app, you might push a shortcut to Google for each destination a user requests, so that Assistant can suggest it later for quick replay.

Build App Actions

App Actions build on top of existing functionality in your Android app. The process is similar for each App Action you implement. App Actions take users directly to specific content or features in your app using capability elements you specify in shortcuts.xml.
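
For Android to find shortcuts.xml, the app manifest references it from the launcher activity. The fragment below is a sketch that assumes the resource lives at res/xml/shortcuts.xml. The activity name MainActivity is a hypothetical placeholder, and the enclosing manifest and application elements are omitted.

```xml
<!-- AndroidManifest.xml (fragment). MainActivity is a hypothetical name. -->
<activity
    android:name=".MainActivity"
    android:exported="true">
    <intent-filter>
        <action android:name="android.intent.action.MAIN" />
        <category android:name="android.intent.category.LAUNCHER" />
    </intent-filter>
    <!-- Points the system at res/xml/shortcuts.xml, where capabilities are declared. -->
    <meta-data
        android:name="android.app.shortcuts"
        android:resource="@xml/shortcuts" />
</activity>
```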

When you build an App Action, the first step is to identify the activity you want to allow users to access from Assistant. Then, using that information, find the closest matching BII from the App Actions BII reference.

BIIs model some of the common ways that users express tasks they want to do using an app or information they seek. For example, BIIs exist for actions like starting an exercise, sending a message, and searching within an app. BIIs are the best way to start with App Actions, as they model common variations of user queries in multiple languages, making it easy for you to quickly voice-enable your app.

Once you identify the in-app functionality and BII to implement, you add or update the shortcuts.xml resource file in your Android app that maps the BII to your app functionality. App Actions defined as capability elements in shortcuts.xml describe how each BII resolves its fulfillment, as well as which parameters are extracted and provided to your app.

A significant portion of developing App Actions is mapping BII parameters into your defined fulfillment. This process commonly takes the form of mapping the expected inputs of your in-app functionality to a BII's semantic parameters.
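
One way this mapping can look is a deep-link fulfillment, where a BII parameter fills a query parameter of a URL your app already handles. The sketch below assumes the url-template form of fulfillment. The exampleapp:// scheme and the exerciseType key are hypothetical, and exercise.name should be verified against the BII reference.

```xml
<!-- res/xml/shortcuts.xml (fragment, inside the <shortcuts> root):
     illustrative parameter mapping. -->
<capability android:name="actions.intent.START_EXERCISE">
    <intent android:action="android.intent.action.VIEW">
        <!-- {?exerciseType} expands to ?exerciseType=<value> when the
             parameter is present. The URL scheme is a hypothetical placeholder. -->
        <url-template android:value="exampleapp://start{?exerciseType}" />
        <!-- Map the BII's semantic parameter to the input your app expects. -->
        <parameter
            android:name="exercise.name"
            android:key="exerciseType" />
    </intent>
</capability>
```

Here the app's expected input (exerciseType) is named on the android:key side, while the BII's semantic parameter (exercise.name) is named on the android:name side, which keeps your in-app naming independent of the BII schema.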

Test App Actions

During development and testing, you use the Google Assistant plugin for Android Studio to create a preview of your App Actions in Assistant for your Google Account. This plugin helps you test how your App Action handles various parameters prior to submitting it for deployment. Once you generate a preview of your App Action in the test tool, you can trigger an App Action on your test device directly from the test tool window.

Media apps

Assistant also offers built-in capabilities to understand media app commands, like "Hey Google, play something by Beyonce," and supports media controls like pause, skip, fast forward, and thumbs up.

Next steps

Follow the App Actions pathway to build an App Action using our sample Android app. Then, continue on to our guide to build App Actions for your own app. You can also explore these additional resources for building App Actions:

- Download and explore our sample fitness Android app on GitHub.

- r/GoogleAssistantDev: The official Reddit community for developers working with Google Assistant.

- If you have a programming question about App Actions, submit a post to Stack Overflow using the "android" and "app-actions" tags. Before posting, ensure your question is on topic and that you've read the guidance for how to ask a good question.

- Report bugs and general issues with App Actions features in our public issue tracker.